If brain cells communicated with each other by voice, our heads would be filled with a constant hubbub. Neurons not only exchange signals in response to external stimuli but also produce background “noise”, nudging one another whether or not there is reason to. Ironically, this seems to be what gives human intelligence an advantage over artificial intelligence. Drawing on research by scientists at the University of Frankfurt, the Max Planck Florida Institute for Neuroscience and the University of Minnesota, Nautilus editor-in-chief Michael Segal explains how instability in living neural networks relates to thinking and imagination. T&P publishes the translation with some abridgements.

One of the main problems of modern artificial intelligence can be illustrated with a yellow school bus.

Photographed from the front, through the windshield, the bus will be recognized accurately by a trained neural network. If it is lying on its side across the road, the algorithm will confidently conclude that it is looking at a snowplow. Viewed from below and at an angle, it may even be classified as a garbage truck. It all comes down to context: when a new image differs strongly from the images the network was trained on, recognition falters, even if the only difference is that the object is rotated or partly hidden by something else. Today's AI models still fall short of the human brain and are therefore less able to cope with unfamiliar situations.

Noisy brain

Computers are digital devices: they operate on binary elements that are either on or off. A neuron's output also resembles a binary code, since at any given moment the neuron either fires or does not. On the input side, however, a neuron behaves like an analog device: the incoming signals are continuous, and many factors shape them.
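The contrast between analog input and all-or-none output can be sketched with a textbook threshold unit. This is a deliberate simplification, not a biophysical model of a neuron: the weights and the threshold of 1.0 are arbitrary illustrative assumptions.

```python
def neuron_output(inputs, weights, threshold=1.0):
    """Toy threshold unit: analog (real-valued) inputs in, all-or-none output."""
    # Input side: continuous values are weighted and summed, like an analog device.
    drive = sum(x * w for x, w in zip(inputs, weights))
    # Output side: the unit either fires (1) or stays silent (0), like a binary element.
    return 1 if drive >= threshold else 0


print(neuron_output([0.4, 0.9, 0.1], [1.0, 0.5, 2.0]))  # 1: a drive of about 1.05 reaches the threshold
print(neuron_output([0.4, 0.3, 0.1], [1.0, 0.5, 2.0]))  # 0: a drive of about 0.75 falls short
```

Small changes in the continuous inputs can flip the binary output, which is one way analog noise at the input can show up in a neuron's all-or-none signal.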

Moreover, human-built computing systems are strictly deterministic: give the same command several times and you will always get the same result. The brain works differently: its response to the same stimulus is different every time.

One reason may be unreliable neurotransmission, that is, failures of signal transmission at synapses. When a neuron is active, a signal travels along its axon, but the probability that it will be passed on to the next neuron is only about 50%. This introduces noise into the system.
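Here is a minimal sketch of how an unreliable synapse turns an identical stimulus into different responses. It assumes, for illustration only, that each presynaptic spike independently gets through with probability 0.5, the figure quoted above; the function names are hypothetical.

```python
import random


def transmit(spike, release_prob=0.5, rng=random):
    """Unreliable synapse: a presynaptic spike gets through only with probability release_prob."""
    return 1 if spike and rng.random() < release_prob else 0


def response(spikes, release_prob=0.5, rng=random):
    """Number of presynaptic spikes that actually reach the next neuron."""
    return sum(transmit(s, release_prob, rng) for s in spikes)


stimulus = [1] * 20           # the same stimulus every time: 20 presynaptic spikes
rng = random.Random(42)       # seeded only so the run is reproducible
print([response(stimulus, rng=rng) for _ in range(5)])  # counts differ from trial to trial, around 10
```

Unlike a deterministic computer, this "circuit" gives a different answer to the same input on each trial, although the average response stays stable.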

Another factor is the constant activity in many parts of the brain. The visual cortex, for example, is activated by visual images, but because the brain has many cross-connections, it simultaneously receives signals from other sources. This lets us take context into account and form expectations: hearing a dog bark, we turn warily to look for it.

Even without visual stimuli, the visual cortex shows activity resembling that seen when stimuli are present, something like visual imagery. You see something while thinking about what you saw yesterday; this, too, may explain why the response to the same stimulus changes from one time to the next.

Finally, experiments and models have shown that correlated activity can arise in brain areas that are far apart. This is common in the mature cortex, where long-range anatomical connections exist. Yet even early in development, before physical connections between these areas have formed, their activity is already correlated.

Scientists have created an intravenous brain-computer interface

At the end of last year, scientists publishing in the Journal of NeuroInterventional Surgery presented a new device for controlling a computer “with the power of thought.” Anyone following neuroscience or Elon Musk knows that electrodes implanted directly in brain tissue can “read” its electrical activity; a decoder then deciphers the signal and feeds it into a neural interface, such as a computer or a neuroprosthesis. Another option is to use implants to stimulate specific parts of the brain. In both cases, the brain-computer interface is a bulky, unsightly “cap” of wires that is hard to use in everyday life. A reliable device hidden from view is another matter: it could let paralyzed patients, for example, open messages or make bank transfers from their home computer. Was this device a breakthrough or just another prototype? Read on.

Schematic representation of an intravenous neural interface. Credit: Thomas J Oxley et al / Journal of NeuroInterventional Surgery 2020

After acute brain damage (stroke or trauma), some healthy nervous tissue usually remains, and thanks to neuroplasticity it can take part in restoring lost functions. If the damaged area is too extensive, or if the body cannot be controlled because spinal cord neurons have died, rehabilitation is no longer enough, and a neural interface may help instead.

A group of Australian scientists led by Thomas J Oxley implanted a recording electrode array via the venous system next to the brain's motor center (the precentral gyrus), which was still healthy at the time of the study, in two patients with amyotrophic lateral sclerosis. Although the electrodes were not inserted into brain tissue but held in the lumen of a vein by a stent, they picked up impulses from the motor cortex quite accurately. A transcutaneous infrared decoder, connected to the implanted unit by a flexible wire, converted the signals into virtual mouse clicks in a popular operating system. The patients moved the cursor with an eye tracker, since eye movements are preserved in this disease (unlike in locked-in syndrome).

After the neural interface was implanted, the patients needed time to train. For six weeks they imagined their paralyzed hand clicking a mouse once or twice, quickly or slowly. During these tasks the device recorded the corresponding cortical activity so that the decoder could later translate it correctly into commands for the home computer.
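The calibration idea (record activity during imagined clicks, then map new activity to the closest learned pattern) can be sketched with a nearest-centroid classifier. This is a stand-in chosen for clarity, not the decoder used in the study, which the article does not describe; the two-number feature vectors and the command names are hypothetical.

```python
from math import dist  # Python 3.8+


def train_decoder(samples):
    """samples: (feature_vector, command) pairs recorded during calibration.
    Returns the mean feature vector (centroid) for each command."""
    sums, counts = {}, {}
    for features, command in samples:
        acc = sums.setdefault(command, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[command] = counts.get(command, 0) + 1
    return {cmd: [v / counts[cmd] for v in acc] for cmd, acc in sums.items()}


def decode(centroids, features):
    """Translate new cortical activity into the command with the closest centroid."""
    return min(centroids, key=lambda cmd: dist(centroids[cmd], features))


# Hypothetical calibration data: two activity features per trial.
calibration = [
    ([0.1, 0.2], "rest"), ([0.2, 0.1], "rest"),
    ([0.9, 0.3], "single_click"), ([1.0, 0.4], "single_click"),
    ([0.5, 0.9], "double_click"), ([0.6, 1.0], "double_click"),
]
centroids = train_decoder(calibration)
print(decode(centroids, [0.95, 0.35]))  # single_click
```

The weeks of training in the study serve the same purpose as the `calibration` list here: collecting enough labeled examples of cortical activity for the decoder to tell the intended commands apart.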

After training, the patients could shop online, use mobile banking and type on a virtual keyboard using “clicks.” Several points stand out: the device was inert in the venous bed (it caused no venous blockage and was not a source of infection), it was reliable (after 12 months, the stent in one patient had stayed in place), it was fast (comparable to intracerebral electrodes) and it was easy to use at home.

“These first-in-human data demonstrate the potential of an endovascular motor neuroprosthesis to digitally control a device using multiple commands in people with paralysis... to improve functional independence,” says Thomas Oxley.

The new intravascular neural interface thus works well, but so far only in a small group of patients. Studies with larger groups will help develop more precise protocols and refine safety profiles.

Text: Marina Kalinkina

Motor neuroprosthesis implanted with neurointerventional surgery improves capacity for activities of daily living tasks in severe paralysis: first in-human experience, by Thomas J Oxley, Peter E Yoo, Gil S Rind, Stephen M Ronayne et al., Journal of NeuroInterventional Surgery. Published online October 2020.

https://dx.doi.org/10.1136/neurintsurg-2020-016862

Where is the noise coming from?

Interestingly, patterns of spontaneous neural activity emerge early in brain development, before sensory perception has formed. Spontaneous activity in the visual cortex, for example, appears even before the eyes first open. Only later does this internal activity become linked to real visual images.

Spontaneous activity is with us from an early age, but its nature is still unknown. It may be genetically predetermined, but more likely it results from self-organization (how a self-organizing system can form complex patterns is described by dynamical systems theory). One might suppose that the whole mind is determined by genes, but that is impossible: there is too little information in DNA to specify all the synaptic connections in the brain. Only a few simple algorithms for the early stages of brain development can be genetically encoded, and these set off the formation and development of a particular structure. The starting point for such a process may be just a few basic rules, for example how neurons form circuits and how circuit activity in turn changes connections in a feedback loop.
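How a simple rule plus a feedback loop can produce structure without a detailed blueprint can be illustrated with Oja's rule, a textbook variant of Hebbian learning ("cells that fire together wire together") with a built-in decay term. It is used here only as an illustration of self-organization, not as a claim about how the developing brain actually wires itself; the input statistics and learning rate are assumptions.

```python
import random


def oja_step(w, x, lr=0.01):
    """One update of Oja's rule: Hebbian growth (y * x) plus a decay term that keeps |w| bounded."""
    y = sum(wi * xi for wi, xi in zip(w, x))          # activity of the receiving neuron
    return [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]


rng = random.Random(1)
w = [rng.uniform(-0.1, 0.1) for _ in range(2)]       # tiny, random initial wiring
for _ in range(5000):
    s = rng.gauss(0.0, 1.0)                           # shared source driving both inputs
    x = [s + rng.gauss(0.0, 0.1), s + rng.gauss(0.0, 0.1)]  # two correlated input neurons
    w = oja_step(w, x)                                # activity reshapes the connections
print([round(v, 2) for v in w])  # both weights end up with the same sign and similar size
```

No weight values were specified in advance: the connections settle into a pattern that reflects the correlations in the activity, which is the kind of "few basic rules" mechanism the paragraph describes.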

BRAIN CELLS

The cells of the central nervous system that process information are called neurons. The human brain contains roughly 86 billion of them. The brain also contains glial cells, in broadly comparable numbers. Glia fill the space between neurons, forming the supporting framework of nervous tissue, and also perform metabolic and other functions.

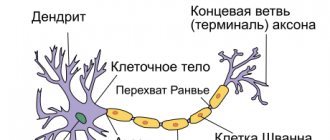

Like all other cells, a neuron is surrounded by a semipermeable (plasma) membrane. Two types of processes extend from the cell body: dendrites and axons. Most neurons have many branching dendrites but only one axon. Dendrites are usually short, whereas axons range from a fraction of a millimeter to about a meter in length. The cell body contains a nucleus and other organelles like those found in other cells of the body.

Why is everything so complicated?

According to the theory of attractor neural networks, network activity settles into one of a finite set of states. A given set of incoming signals drives the network into one of these states, and other, similar signals drive it into the same state. This makes the network robust to small fluctuations and interference. The theory has been discussed for decades, but adequate experimental evidence that the brain works this way has not yet been obtained. That would require recording the state of a large enough number of brain cells under stable enough conditions, as well as tools for activating those cells directly.
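The classic textbook example of an attractor network is the Hopfield network. The sketch below stores two patterns of +1/-1 "neurons" and shows a corrupted input falling back into the stored state. It illustrates the theory only; it says nothing about whether the brain actually works this way, and the patterns are arbitrary.

```python
def train_hopfield(patterns):
    """Store +1/-1 patterns with the Hebbian outer-product rule (no self-connections)."""
    n = len(patterns[0])
    W = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    W[i][j] += p[i] * p[j] / n
    return W


def recall(W, state, sweeps=10):
    """Update neurons one at a time until nothing changes: the network has reached an attractor."""
    s = list(state)
    for _ in range(sweeps):
        changed = False
        for i in range(len(s)):
            h = sum(W[i][j] * s[j] for j in range(len(s)))
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i], changed = new, True
        if not changed:
            break
    return s


stored = [[1, 1, 1, 1, -1, -1, -1, -1], [1, -1, 1, -1, 1, -1, 1, -1]]
W = train_hopfield(stored)
noisy = [1, 1, -1, 1, -1, -1, -1, -1]   # first pattern with one neuron flipped
print(recall(W, noisy) == stored[0])    # True: the similar input ends in the same state
```

This is the robustness the theory describes: inputs that differ slightly from a stored pattern are pulled into the same final state.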

Perhaps the brain's unpredictability serves a purpose.

Looking at the same object, we always perceive it a little differently, and it is this variability in the reaction to the same stimulus that helps us discover new facets of familiar things.

Any visual image contains thousands of details. By picking out what matters, we filter out the rest. This resembles evolution, in which random mutations arise and the fittest individuals survive. By analogy, the brain may add “noise” in order to try out many possible images and find the one that best fits a given context.
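The mutation-and-selection analogy can be made concrete with a (1+1) evolution strategy, a standard optimization heuristic: add random noise to the current guess and keep the change only if it fits better. This is only an analogy for the idea in the text, not a model of the brain; the "context" vector and the fitness function are hypothetical.

```python
import random


def fit(candidate, context):
    """Higher is better: negative squared distance between an interpretation and the context."""
    return -sum((c - t) ** 2 for c, t in zip(candidate, context))


def noisy_search(context, start, noise=0.3, steps=500, seed=0):
    """(1+1)-style search: perturb the current guess with noise, keep it only if it fits better."""
    rng = random.Random(seed)
    best = list(start)
    for _ in range(steps):
        trial = [b + rng.gauss(0.0, noise) for b in best]   # random variation ("mutation")
        if fit(trial, context) > fit(best, context):        # selection
            best = trial
    return best


context = [1.0, -0.5, 2.0]     # hypothetical features that the context calls for
guess = noisy_search(context, start=[0.0, 0.0, 0.0])
print([round(g, 1) for g in guess])  # ends up close to the context vector
```

Without the noise term the guess would never move; the randomness is what lets the search explore alternatives before selection keeps the best one.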

Can the algorithm behind spontaneous activity be worked out? Probably. One approach is to study twins; another is to observe spontaneous activity at its earliest stages.

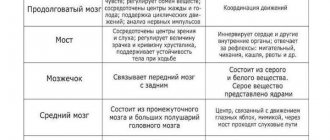

Brain stem

The brain stem is located at the base of the skull. It connects the spinal cord to the forebrain and consists of the medulla oblongata, pons, midbrain and diencephalon.

Motor pathways to the spinal cord run through the midbrain and diencephalon, and indeed through the entire brain stem, as do some sensory pathways from the spinal cord to the higher parts of the brain. Below the midbrain lies the pons, which is connected to the cerebellum by nerve fibers. The lowest part of the brain stem, the medulla oblongata, passes directly into the spinal cord. The medulla contains centers that regulate the activity of the heart and breathing according to external circumstances and that control blood pressure and the peristalsis of the stomach and intestines.

At the level of the brain stem, the main pathways running from each cerebral hemisphere cross to the other side. As a result, each hemisphere controls the opposite side of the body and is connected to the opposite half of the cerebellum.

Putting noise to work

Deep neural networks, the most successful type of AI, are modeled on the human brain: they have neurons, a hierarchical structure and flexible connections. But it is debated whether such networks can reproduce the processes that take place when the brain processes signals.

Deep neural networks are often criticized for being strictly bottom-up: the signal passes from input to output through a series of intermediate layers without any loops. Recurrent connections (for example, between neurons in the same layer) are either absent or modeled rather crudely, and there are usually no top-down connections carrying signals from the output back toward the input. Such connections make a neural network harder to train, but the cerebral cortex is full of them. A feed-forward network is a gross oversimplification, very different from the densely interconnected parts of the brain.
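The difference between a strictly bottom-up pass and a loop with a top-down connection can be sketched with a tiny two-layer network. All weights here are hypothetical, and the feedback version is a generic recurrent sketch rather than a model of cortex.

```python
import math


def layer(inputs, weights):
    """One layer of units: weighted sums passed through tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs))) for row in weights]


W_up1 = [[0.8, -0.2], [0.3, 0.9]]   # input -> hidden (hypothetical weights)
W_up2 = [[1.0, 0.5]]                # hidden -> output
W_down = [[0.6], [-0.4]]            # output -> hidden: the top-down connection


def feedforward(x):
    """Strictly bottom-up: a single pass from input to output, no loops."""
    return layer(layer(x, W_up1), W_up2)


def with_feedback(x, iterations=20):
    """Hidden units get bottom-up input plus a top-down signal from the output;
    the loop repeats until the activity settles."""
    out = [0.0]
    for _ in range(iterations):
        bottom_up = [sum(w * xi for w, xi in zip(row, x)) for row in W_up1]
        top_down = [sum(w * o for w, o in zip(row, out)) for row in W_down]
        hidden = [math.tanh(b + t) for b, t in zip(bottom_up, top_down)]
        out = layer(hidden, W_up2)
    return out


x = [1.0, 0.5]
print(feedforward(x), with_feedback(x))  # the top-down loop changes the final answer
```

On its first iteration the loop reproduces the feed-forward result exactly; the later iterations are where the output starts to reshape the very layer that produced it.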

Neural activity in the human brain typically consists of continuous cross-talk between different areas, in which sensory stimuli play only a modulating role.

Deep neural networks work very differently: they are active only when input data arrives. What does this lead to? For one thing, AI struggles to grasp context: a deep network is trained on a specific dataset and fails when fundamentally new information appears. According to one theory, by contrast, spontaneous activity in the brain encodes context. This activity may form the neural basis of visual processing, in which we establish spatial relationships between objects and their parts. The assumption is somewhat far-fetched, since the function of spontaneous activity is not yet fully understood, but it is already clear that it can play an important role in how we interpret events.
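A toy contrast between a feed-forward unit, which is silent without input, and a recurrent unit that keeps generating activity from its own feedback and internal noise. The parameters are hypothetical, and the sketch does not show how spontaneous activity might encode context; it only shows that such activity can exist with no stimulus at all.

```python
import math
import random


def feedforward_unit(x, w=(0.7, -0.3)):
    """Feed-forward unit without a bias term: no input, no activity."""
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)))


def spontaneous(steps=200, noise=0.2, w_rec=0.9, seed=0):
    """Recurrent unit driven only by its own feedback and internal noise (no external input)."""
    rng = random.Random(seed)
    a, trace = 0.0, []
    for _ in range(steps):
        a = math.tanh(w_rec * a + rng.gauss(0.0, noise))
        trace.append(a)
    return trace


print(feedforward_unit([0.0, 0.0]))  # 0.0: silent without input
trace = spontaneous()
print(min(trace), max(trace))        # ongoing fluctuations although nothing is presented
```

In the brain, the article suggests, a stimulus modulates this kind of ongoing activity rather than switching the system on from zero.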

We can only draw inspiration from observations of the brain. For example, the unreliability of synaptic connections mentioned above is already emulated in machine learning to avoid “overfitting” (a situation in which a model built by a neural network explains the examples in the training set well but does not work on examples that were not used in training. Note by T&P.)
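The machine-learning counterpart of unreliable synapses is dropout, which randomly silences parts of a network during training. The sketch below drops individual connections, the variant known as DropConnect, because that maps most directly onto failing synapses. It assumes the simple rescaling familiar from inverted dropout; published variants differ in how they handle inference, and the function name is hypothetical.

```python
import random


def dropconnect_forward(x, weights, keep_prob=0.5, rng=random, training=True):
    """Linear layer whose individual connections fail at random during training,
    loosely mimicking unreliable synapses."""
    out = []
    for row in weights:
        total = 0.0
        for w, xi in zip(row, x):
            if training:
                if rng.random() < keep_prob:        # this connection transmits
                    total += w * xi / keep_prob     # rescale so the expected sum is unchanged
            else:
                total += w * xi                     # at test time every connection is used
        out.append(total)
    return out


W = [[0.5, -1.0, 2.0]]
x = [1.0, 2.0, 3.0]
print(dropconnect_forward(x, W, training=False))  # [4.5]
rng = random.Random(0)
samples = [dropconnect_forward(x, W, rng=rng)[0] for _ in range(10000)]
print(sum(samples) / len(samples))  # close to 4.5 on average, though single passes vary widely
```

Because the network cannot rely on any single connection always being present, it is pushed toward solutions that generalize rather than memorize the training set.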


HOW THE BRAIN WORKS

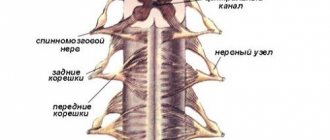

Let's look at a simple example. What happens when we pick up a pencil lying on the table? Light reflected from the pencil is focused by the lens of the eye onto the retina, where an image of the pencil forms. It is picked up by the corresponding cells, which send signals to the brain's main sensory relay nuclei in the thalamus, chiefly to the part called the lateral geniculate body. There, numerous neurons that respond to the distribution of light and dark are activated. The axons of lateral geniculate neurons run to the primary visual cortex in the occipital lobe of the cerebral hemispheres. Impulses arriving there from the thalamus are converted into a complex sequence of discharges of cortical neurons: some respond to the boundary between the pencil and the table, others to the corners in the pencil's image, and so on. From the primary visual cortex, information travels along axons to the association visual cortex, where the image is recognized, in this case as a pencil. Recognition in this part of the cortex relies on previously accumulated knowledge about the outlines of objects.
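Neurons that respond to the boundary between the pencil and the table behave, very loosely, like an edge detector. The sketch below is a crude image-processing analogy, not a model of visual cortex: it marks where brightness changes between neighboring pixels in a hypothetical "retinal image".

```python
def edge_response(image):
    """Respond to vertical light/dark boundaries: difference between each pixel
    and its right-hand neighbor (a crude oriented edge detector)."""
    return [[row[c + 1] - row[c] for c in range(len(row) - 1)] for row in image]


# Hypothetical 4x6 image: 0 = dark table, 1 = light pencil.
image = [
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
]
for row in edge_response(image):
    print(row)  # [0, 1, 0, -1, 0]: nonzero only where the pencil meets the table
```

Uniform regions produce no response at all; only the boundaries stand out, which is the kind of simplified description later stages can build on when recognizing the object.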

Planning the movement (picking up the pencil) probably takes place in the frontal cortex of the cerebral hemispheres. The same region contains motor neurons that send commands to the muscles of the hand and fingers. The hand's approach to the pencil is guided by the visual system and by proprioceptors, which sense the position of muscles and joints and send this information to the central nervous system. When we take the pencil in our hand, pressure receptors in the fingertips tell us whether our grip is secure and how much force is needed to hold the pencil. If we want to write our name with it, other information stored in the brain must be activated to enable this more complex movement, and visual control helps make it more precise.
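The way vision and proprioception steer the hand toward the pencil resembles a feedback loop: sense the remaining error, issue a correction, repeat. The sketch below is a generic proportional controller from control engineering, offered as an analogy only; the gain, tolerance and distance are hypothetical values.

```python
def reach(hand, target, gain=0.3, tolerance=0.01, max_steps=100):
    """Move the hand toward the target, correcting by a fraction of the sensed error at each step."""
    path = [hand]
    for _ in range(max_steps):
        error = target - hand          # mismatch reported by vision and position sense
        if abs(error) < tolerance:     # close enough: stop correcting
            break
        hand += gain * error           # corrective motor command
        path.append(hand)
    return path


path = reach(hand=0.0, target=30.0)  # hypothetical distance to the pencil, in cm
print(len(path), round(path[-1], 2))
```

Each correction is based on fresh sensory feedback, so the movement converges on the target even though no single command is exact.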

The example above shows that performing a fairly simple action involves large areas of the brain, extending from the cortex to the subcortical regions. In more complex behaviors involving speech or thinking, other neural circuits are activated, covering even larger areas of the brain.

What the brain can do

By one scientific estimate, the human brain can hold a number of bytes written as a number with 8,432 zeros.

The great chess player Alekhine could play blindfold, from memory, against 30–40 opponents at once.

Julius Caesar knew every soldier in his army by sight and by name, all 25,000 of them.

A man named Gasi knew by heart all 2,500 books he had read in his lifetime.

Muscovite Samvel Gharibyan can reproduce without error 1,000 words dictated to him in random order from ten languages.

Academician Sergei Chaplygin could accurately recall a phone number he had happened to dial only once, five years earlier.

Englishman Dominic O'Brien received a written ban from casinos: his extraordinary memory let him keep track of every card remaining in the deck.

BRAIN NEUROCHEMISTRY

Among the most important neurotransmitters in the brain are acetylcholine, norepinephrine, serotonin, dopamine, glutamate, gamma-aminobutyric acid (GABA), endorphins and enkephalins. Besides these well-known substances, the brain probably uses many others that have not yet been studied. Some neurotransmitters act only in certain areas of the brain: endorphins and enkephalins, for example, are found mainly in the pathways that conduct pain impulses. Others, such as glutamate and GABA, are more widely distributed.